SAEDNEWS: In the midst of the Olympic excitement, researchers at Edith Cowan University tasked the AI-powered image generation platform, Midjourney, with producing images of the Olympic teams from 40 different nations.

Strangely, the AI tool portrayed the Australian team with kangaroo bodies and koala heads, while the Greek team was illustrated in ancient armor.

The 40 nations included Australia, Ireland, Greece, and India.

The resulting images exposed several biases ingrained in the AI's training data, relating to gender, sporting events, culture, and religion.

Men were depicted roughly five times as often as women, and several teams, including those from Ukraine and Turkey, featured only male athletes.

Among the athletes across the 40 images, 82% were men, while just 17% were women.

The researchers also identified a significant bias related to events.

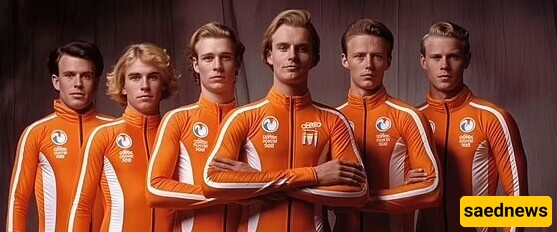

The Canadian team was represented as hockey players, Argentina as footballers, and the Netherlands as cyclists.

This suggests that the AI tends to stereotype countries according to the sports for which they are best known internationally.

In terms of cultural representation, the Australian team was unusually depicted with kangaroo bodies and koala heads, while Nigeria's team wore traditional attire, and Japan's athletes were shown in kimonos.

Religious bias was also apparent in the Indian team's portrayal, where all athletes were depicted with a bindi, a symbol primarily associated with Hinduism. The researchers stated, "This representation homogenizes the team based on a single religious practice, ignoring the religious diversity present in India."

The Greek Olympic team was oddly illustrated in ancient armor, and the Egyptian team appeared in attire resembling that of pharaohs.

Facial expressions of the athletes varied significantly across teams. The South Korean and Chinese teams were shown with serious looks, while the Irish and New Zealand teams were depicted smiling.

Dr. Kelly Choong, a senior lecturer at Edith Cowan University, remarked, "The biases in AI stem from human biases that shape the algorithms, which the AI then interprets literally. Human judgments and biases are presented in AI as if they were factual; the lack of critical thinking and evaluation means the validity of this information isn't questioned, only the task itself."

Dr. Choong emphasized that these biases could lead to issues of equity, harmful generalizations, and discrimination.

With society increasingly depending on technology for information, these perceptions may create real disadvantages for individuals of diverse identities, he noted.

"A country's association with specific sports can lead to the belief that everyone in that nation excels in those activities — for instance, Kenya with running, Argentina with football, and Canada with ice hockey. These distorted 'realities' can become ingrained in individuals who believe such stereotypes and may inadvertently reinforce them in real life."

The researchers hope their findings will inspire developers to enhance their algorithms to mitigate these biases.

"Technology will evolve to improve its algorithms and outputs, but it will continue to focus on completing tasks rather than providing an accurate representation," Dr. Choong stated.

"Society will need to question the validity of AI-generated information and critically assess its accuracy."

Educating users, and empowering them to challenge AI outputs, will be crucial to coexisting with AI and the information it generates.