The Ray-Ban Meta smart glasses have received a major AI-powered upgrade that lets their built-in assistant respond to what the wearer sees and hears.

According to Saed News, citing Fararu, Meta's latest AI advancements have made the Ray-Ban Meta smart glasses much more interactive. The glasses can now help with outfit choices, translate text, describe photos, and identify objects in real time.

Meta, formerly known as Facebook, has introduced several new features aimed at making its smart glasses more practical and engaging. The company is currently testing a "multimodal" AI assistant that answers user questions based on what the glasses see and hear through their built-in camera and microphone.

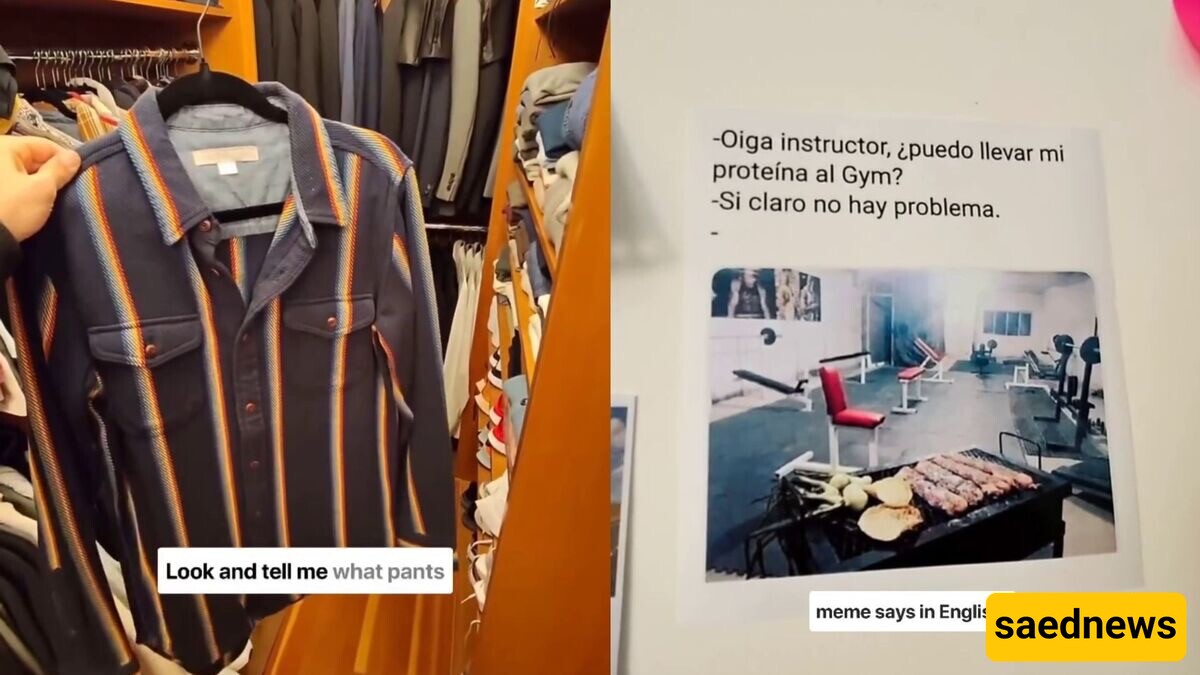

Meta CEO Mark Zuckerberg demonstrated some of these capabilities in an Instagram Reel, asking the glasses to suggest pants that would match the shirt he was holding. The AI analyzed the shirt's color and pattern, then recommended two suitable options.

Similarly, Meta's Chief Technology Officer, Andrew Bosworth, showed how the AI assistant could translate text, summarize information, and recognize objects. For instance, he pointed the glasses at a piece of wall art shaped like California, and the assistant correctly identified it and provided information about the state.

Imagine you're in a boutique, holding a stunning emerald blouse, but unsure what pants to pair with it. Instead of scrolling endlessly through Pinterest, you simply whisper, "Hey Meta, what pants go with this?" The glasses scan the blouse, analyze its color and style, and within seconds offer personalized fashion advice, much like having a personal stylist on call.

Struggling to understand a foreign menu? The glasses can translate it instantly.

Visiting a museum? They can describe the artwork or artifacts in front of you.

Need help with Instagram captions? The AI can analyze your photos and suggest engaging descriptions.

Currently, this AI-powered technology is available only to a select group of tech-savvy users in the United States. Still, speculation is growing about a future in which smart glasses serve as personal assistants, fashion consultants, translators, and knowledge hubs all in one. Imagine Siri, Alexa, and Google Assistant combined and worn right on your face.

When a user asks a question, the glasses capture an image, send it to the cloud for processing, and then answer the spoken query.

Users need to give specific voice commands to activate the assistant, such as "Hey Meta, look at this," followed by their question.

Responses take a few seconds, as the AI analyzes the image and processes the request.

Captured photos and responses are stored in the Meta View app on the user's smartphone, which makes the feature useful for taking notes, learning, and exploring the world.

With their AI-powered multimodal assistant, the Ray-Ban Meta smart glasses are shaping up to be an essential tool for shopping, traveling, learning, and entertainment.